"A Databservatory for Human Behavior"

Rick O. Gilmore

Support: NSF BCS-1147440, NSF BCS-1238599, NICHD U01-HD-076595

2017-02-21 12:02:28

Adolph, K., Tamis-LeMonda, C. & Gilmore, R.O. (2016). PLAY Project: Webinar discussions on protocol and coding. Databrary. Retrieved February 17, 2017 from https://nyu.databrary.org/volume/232

(Collaboration 2015) "…seriously underestimated reproducibility of psychological science."

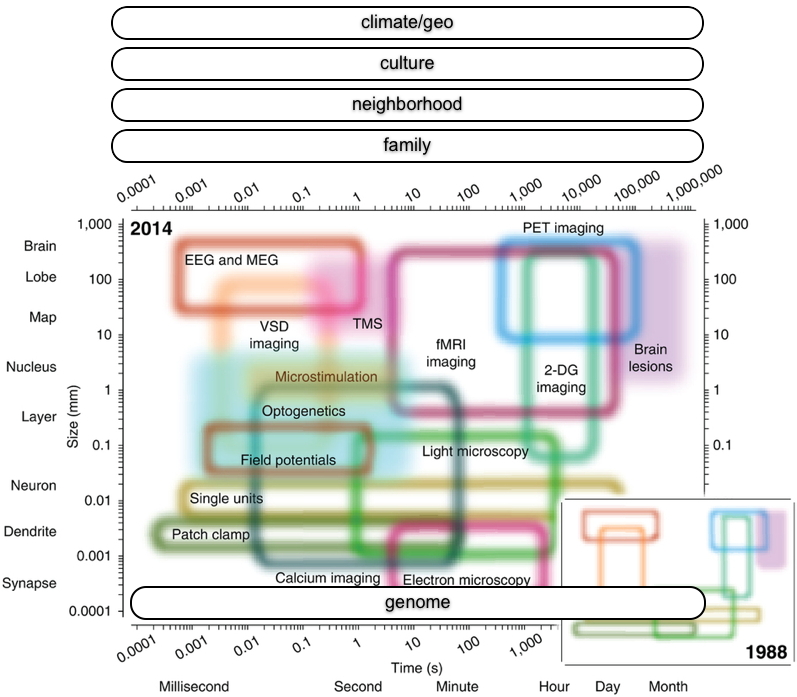

"We have empirically assessed the distribution of published effect sizes and estimated power by extracting more than 100,000 statistical records from about 10,000 cognitive neuroscience and psychology papers published during the past 5 years. The reported median effect size was d=0.93 (inter-quartile range: 0.64-1.46) for nominally statistically significant results and d=0.24 (0.11-0.42) for non-significant results. Median power to detect small, medium and large effects was 0.12, 0.44 and 0.73, reflecting no improvement through the past half-century. Power was lowest for cognitive neuroscience journals. 14% of papers reported some statistically significant results, although the respective F statistic and degrees of freedom proved that these were non-significant; p value errors positively correlated with journal impact factors. False report probability is likely to exceed 50% for the whole literature. In light of our findings the recently reported low replication success in psychology is realistic and worse performance may be expected for cognitive neuroscience."

Wicherts, J. M., Borsboom, D., Kats, J., & Molenaar, D. (2006). The poor availability of psychological research data for reanalysis. American Psychologist, 61(7), 726–728. https://doi.org/10.1037/0003-066X.61.7.726

Amidst the recent flood of concerns about transparency and reproducibility in the behavioral and clinical sciences, we suggest a simple, inexpensive, easy-to-implement, and uniquely powerful tool to improve the reproducibility of scientific research and accelerate progress: video recordings of experimental procedures. Widespread use of video for documenting procedures could make moot disagreements about whether empirical replications truly reproduced the original experimental conditions. We call on researchers, funders, and journals to make commonplace the collection and open sharing of video-recorded procedures.

Shonkoff, J. P., & Phillips, D. A. (Eds.). (2000). From neurons to neighborhoods: The science of early childhood development. National Academies Press.

Tamis-LeMonda, C. (2013). http://doi.org/10.17910/B7CC74.

'Free' service (email, calendar, search, communications platform) vs.

Help institution, community, society

This talk was produced in RStudio version 1.0.136 on 2017-02-21. The code used to generate the slides can be found at http://github.com/gilmore-lab/cog-bbab-talk-2017-02-22/. Information about the R Session that produced the code is as follows:

sessionInfo()

## R version 3.3.2 (2016-10-31)
## Platform: x86_64-apple-darwin13.4.0 (64-bit)
## Running under: OS X El Capitan 10.11.6
##
## locale:
## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8
##
## attached base packages:
## [1] stats graphics grDevices utils datasets methods base
##
## other attached packages:
## [1] dplyr_0.5.0 ggplot2_2.2.1
##
## loaded via a namespace (and not attached):
## [1] Rcpp_0.12.8 knitr_1.15.1 magrittr_1.5 munsell_0.4.3
## [5] colorspace_1.3-2 R6_2.2.0 stringr_1.1.0 plyr_1.8.4
## [9] tools_3.3.2 grid_3.3.2 gtable_0.2.0 DBI_0.5-1
## [13] htmltools_0.3.5 yaml_2.1.14 lazyeval_0.2.0 rprojroot_1.1
## [17] digest_0.6.11 assertthat_0.1 tibble_1.2 evaluate_0.10
## [21] rmarkdown_1.3 labeling_0.3 stringi_1.1.2 scales_0.4.1
## [25] backports_1.0.4

Baker, Monya. 2016. “1,500 Scientists Lift the Lid on Reproducibility.” Nature News 533 (7604): 452. doi:10.1038/533452a.

Begley, C. Glenn, and Lee M. Ellis. 2012. “Drug Development: Raise Standards for Preclinical Cancer Research.” Nature 483 (7391): 531–33. doi:10.1038/483531a.

Collaboration, Open Science. 2015. “Estimating the Reproducibility of Psychological Science.” Science 349 (6251): aac4716. doi:10.1126/science.aac4716.

Fanelli, Daniele. 2009. “How Many Scientists Fabricate and Falsify Research? A Systematic Review and Meta-Analysis of Survey Data.” PLOS ONE 4 (5): e5738. doi:10.1371/journal.pone.0005738.

Ferguson, Christopher J. 2015. “‘Everybody Knows Psychology Is Not a Real Science’: Public Perceptions of Psychology and How We Can Improve Our Relationship with Policymakers, the Scientific Community, and the General Public.” American Psychologist 70 (6): 527–42. doi:10.1037/a0039405.

Gilbert, Daniel T., Gary King, Stephen Pettigrew, and Timothy D. Wilson. 2016. “Comment on ‘Estimating the Reproducibility of Psychological Science’.” Science 351 (6277): 1037. doi:10.1126/science.aad7243.

Gilmore, R.O., and K.E. Adolph. 2017. “Video Can Make Science More Open, Transparent, Robust, and Reproducible,” February. http://osf.io/3kvp7.

Goodman, Steven N., Daniele Fanelli, and John P. A. Ioannidis. 2016. “What Does Research Reproducibility Mean?” Science Translational Medicine 8 (341): 341ps12. doi:10.1126/scitranslmed.aaf5027.

Henrich, Joseph, Steven J. Heine, and Ara Norenzayan. 2010. “The Weirdest People in the World?” The Behavioral and Brain Sciences 33 (2-3): 61–83; discussion 83–135. doi:10.1017/S0140525X0999152X.

Higginson, Andrew D., and Marcus R. Munafò. 2016. “Current Incentives for Scientists Lead to Underpowered Studies with Erroneous Conclusions.” PLOS Biology 14 (11): e2000995. doi:10.1371/journal.pbio.2000995.

Lash, Timothy L. 2015. “Declining the Transparency and Openness Promotion Guidelines.” Epidemiology 26 (6). LWW: 779–80. http://journals.lww.com/epidem/Fulltext/2015/11000/Declining_the_Transparency_and_Openness_Promotion.1.aspx.

Munafò, Marcus R., Brian A. Nosek, Dorothy V. M. Bishop, Katherine S. Button, Christopher D. Chambers, Nathalie Percie du Sert, Uri Simonsohn, Eric-Jan Wagenmakers, Jennifer J. Ware, and John P. A. Ioannidis. 2017. “A Manifesto for Reproducible Science.” Nature Human Behaviour 1 (January): 0021. doi:10.1038/s41562-016-0021.

Nosek, B. A., G. Alter, G. C. Banks, D. Borsboom, S. D. Bowman, S. J. Breckler, S. Buck, et al. 2015. “Promoting an Open Research Culture.” Science 348 (6242): 1422–5. doi:10.1126/science.aab2374.

Prinz, Florian, Thomas Schlange, and Khusru Asadullah. 2011. “Believe It or Not: How Much Can We Rely on Published Data on Potential Drug Targets?” Nature Reviews Drug Discovery 10 (9): 712. doi:10.1038/nrd3439-c1.

Simmons, Joseph P., Leif D. Nelson, and Uri Simonsohn. 2011. “False-Positive Psychology: Undisclosed Flexibility in Data Collection and Analysis Allows Presenting Anything as Significant.” Psychological Science 22 (11): 1359–66. doi:10.1177/0956797611417632.

Szucs, Denes, and John P. A. Ioannidis. 2016. “Empirical Assessment of Published Effect Sizes and Power in the Recent Cognitive Neuroscience and Psychology Literature.” bioRxiv, August, 071530. doi:10.1101/071530.

Tamis-LeMonda, Catherine. 2013. “Language, Cognitive, and Socio-Emotional Skills from 9 Months Until Their Transition to First Grade in U.S. Children from African-American, Dominican, Mexican, and Chinese Backgrounds.” Databrary. doi:10.17910/B7CC74.

Vanpaemel, Wolf, Maarten Vermorgen, Leen Deriemaecker, and Gert Storms. 2015. “Are We Wasting a Good Crisis? The Availability of Psychological Research Data After the Storm.” Collabra: Psychology 1 (1). doi:10.1525/collabra.13.