2021-10-19 08:55:00

Preliminaries

About me

- Professor of Psychology

- Founding Director of Human Imaging, PSU SLEIC

- Co-Founder/Co-Director Databrary.org

- B.A. in cognitive science, Brown University

- Ph.D. in cognitive neuroscience, Carnegie Mellon University

- vision, perception & action, brain development, open science

- ham (K3ROG), actor/singer, banjo-picker, hiker/cyclist, coder

Overview

- Reproducibility in science

- Science and sin

- BS: Beyond sin

Reproducibility in science

What proportion of studies in the published scientific literature are actually true?

- 100%

- 90%

- 70%

- 50%

- 30%

How do we define what “actually true” means?

Is there a reproducibility crisis in science?

- Yes, a significant crisis

- Yes, a slight crisis

- No crisis

- Don’t know

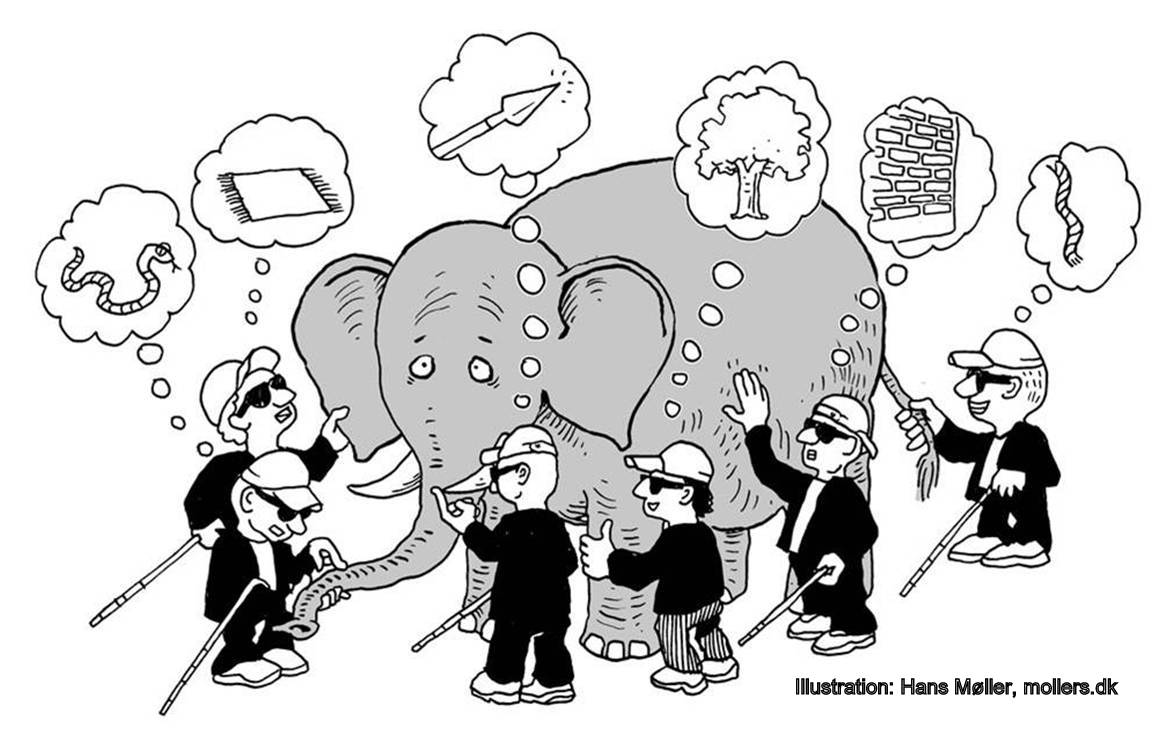

What does “reproducibility” mean?

- Are we all talking about the same thing?

Methods reproducibility

- Enough details about materials & methods are recorded (& reported) so that the same results can be obtained with the same materials & methods

Results reproducibility

- Same results from independent study

Inferential reproducibility

- Same inferences from one or more studies or reanalyses

- Meta- or mega-analyses

Factors contributing to irreproducible research

Reproducibility crisis

- Not just psychology and related behavioral sciences

- “Hard” sciences, too

- Challenges affect every stage, from data collection to statistical analysis to reporting and publishing

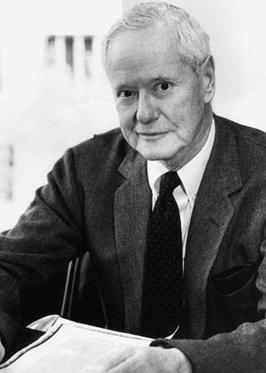

Robert Merton and The ‘Ethos’ of Science

Wikipedia

- universalism: scientific validity is independent of sociopolitical status/personal attributes of its participants

- communalism: common ownership of scientific goods (intellectual property)

- disinterestedness: scientific institutions benefit a common scientific enterprise, not specific individuals

- organized skepticism: claims should be exposed to critical scrutiny before being accepted

So, is science ‘full of it?’

- BS persuades, but (knowingly) disregards truth (Frankfurt, On Bullshit)

- Science practices and communications attempt to persuade about the truth

- But if truth value disregarded, overlooked, downplayed, exaggerated…

Science and sin

The sin of unreliability

- There are many ways to be wrong…

Studies are underpowered

“Assuming a realistic range of prior probabilities for null hypotheses, false report probability is likely to exceed 50% for the whole literature.”

(Szucs & Ioannidis, 2017)

- Too often our studies are too small…
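The link between low power and a high false report probability follows from Bayes' rule. The sketch below is a simplified illustration, not the procedure used in the cited paper: it assumes a single significance threshold `alpha`, a single value for power, and no bias or selective reporting. The function name and the example prior (80% of tested null hypotheses true) are hypothetical choices made for illustration.

```python
def false_report_probability(prior_h0: float, power: float, alpha: float = 0.05) -> float:
    """Return P(H0 is true | result is significant) via Bayes' rule.

    prior_h0: assumed proportion of tested null hypotheses that are true
    power:    probability of a significant result when H0 is false
    alpha:    probability of a significant result when H0 is true
    """
    if not (0.0 <= prior_h0 <= 1.0):
        raise ValueError("prior_h0 must be in [0, 1]")
    if not (0.0 < power <= 1.0) or not (0.0 < alpha < 1.0):
        raise ValueError("power must be in (0, 1] and alpha in (0, 1)")
    false_positives = alpha * prior_h0          # significant, but H0 true
    true_positives = power * (1.0 - prior_h0)   # significant, and H0 false
    return false_positives / (false_positives + true_positives)


if __name__ == "__main__":
    # Illustrative assumption: 80% of tested nulls are true.
    for power in (0.8, 0.5, 0.2):
        frp = false_report_probability(prior_h0=0.8, power=power)
        print(f"power={power:.1f}  false report probability={frp:.2f}")
```

Under these assumed inputs, dropping power from 0.8 to 0.2 raises the false report probability from 0.20 to 0.50, which is why small studies are costly even when each one is analyzed correctly.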

The sin of hoarding…

…grants, …students, …data

Psychologists asked to share data…

(Vanpaemel, Vermorgen, Deriemaecker, & Storms, 2015)

| Response | Percent |
|---|---|
| No reply | 41% |
| Refused/unable to share data | 18% |
| No data despite promise | 4% |
| Data shared after reminder | 16% |
| Data shared after 1st request | 22% |

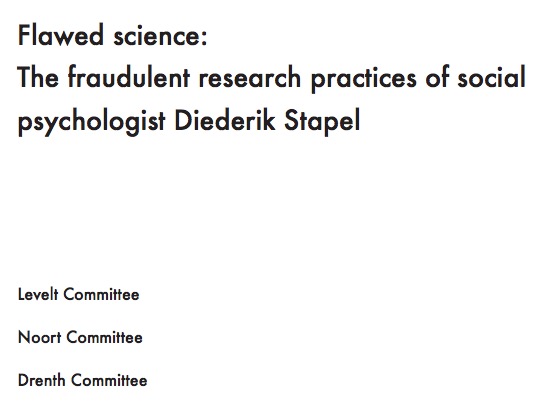

The sin of corruptibility…

The sin of bias…

Bem, D.J. (2011). Experimental evidence for anomalous retroactive influences on cognition and affect. Journal of Personality and Social Psychology, 100(3), 407-425.

“This article reports 9 experiments, involving more than 1,000 participants, that test for retroactive influence by ‘time-reversing’ well-established psychological effects so that the individual’s responses are obtained before the putatively causal stimulus events occur.”

“We argue that in order to convince a skeptical audience of a controversial claim, one needs to conduct strictly confirmatory studies and analyze the results with statistical tests that are conservative rather than liberal…”

“We conclude that Bem’s p values do not indicate evidence in favor of precognition; instead, they indicate that experimental psychologists need to change the way they conduct their experiments and analyze their data.”

(Wagenmakers, Wetzels, Borsboom, & van der Maas, 2011)

The sin of hurrying…

The sin of narrowmindedness…

“…psychologists tend to treat other peoples’ theories like toothbrushes; no self-respecting individual wants to use anyone else’s.”

“The toothbrush culture undermines the building of a genuinely cumulative science, encouraging more parallel play and solo game playing, rather than building on each other’s directly relevant best work.”

(Mischel, 2011)

The sin of pragmatism…

“Reviewers and editors want novel, interesting results. Why would I waste my time doing careful direct replications?”

“Reviewing papers is hard, unpaid work. If I have to check someone’s stats, too, I’ll quit.”

– Any number of researchers I’ve talked with

In our defense…

Behavior is multidimensional

Embedded in nested networks of causal factors

Humans are diverse

But much (lab-based) data collected are from Western, Educated, Industrialized, Rich, Democratic (WEIRD) populations

Data about humans are sensitive, hard(er) to share

- Must protect participants’ identities

- Must protect from harm/embarrassment

- Must anonymize and/or get permission

- Restrictions/controls in academic/biomedical research >> commercial

Psychology is harder than physics

BS: beyond sin

Beyond sin

- No physics envy, but we can learn from physics and other fields

- Openness and transparency are the means, not the ends

- How do we accelerate, broaden, and deepen discovery?

Reproducibility in psychological science

- Bigger samples

- Multiple replications

- Registration

- Data, procedure, and materials sharing

- “Data blinding”

- Larg(er)-scale replication studies
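How much bigger is "bigger"? A rough planning calculation is sketched below for one common case: a two-sided comparison of two independent group means. It uses the normal approximation, so it slightly underestimates what a t-test-based calculation would give. The function name and default values are assumptions made for illustration, not taken from the talk.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sample size per group for a two-sided, two-sample test.

    d:     assumed standardized mean difference (Cohen's d), nonzero
    alpha: two-sided significance threshold
    power: desired probability of detecting an effect of size d
    Uses n = 2 * ((z_{1-alpha/2} + z_{power}) / d)^2 (normal approximation).
    """
    if d == 0:
        raise ValueError("d must be nonzero")
    if not (0.0 < alpha < 1.0) or not (0.0 < power < 1.0):
        raise ValueError("alpha and power must be in (0, 1)")
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1.0 - alpha / 2.0)
    z_power = std_normal.inv_cdf(power)
    return ceil(2.0 * ((z_alpha + z_power) / abs(d)) ** 2)


if __name__ == "__main__":
    for d in (0.8, 0.5, 0.2):
        print(f"d={d:.1f}  n per group ~ {n_per_group(d)}")
```

Because the required sample size scales with 1/d², halving the assumed effect size roughly quadruples the number of participants needed.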

Reproducibility Project: Psychology (RPP)

“We conducted replications of 100…studies published in three psychology journals using high-powered designs and original materials when available….The mean effect size (r) of the replication effects …was half the magnitude of the mean effect size of the original effects…”

“Ninety-seven percent of original studies had significant results (P < .05). Thirty-six percent of replications had significant results.”

“39% of effects were subjectively rated to have replicated the original result…”

(Open Science Collaboration, 2015)

(Camerer et al., 2018)

If it’s too good to be true, it probably isn’t

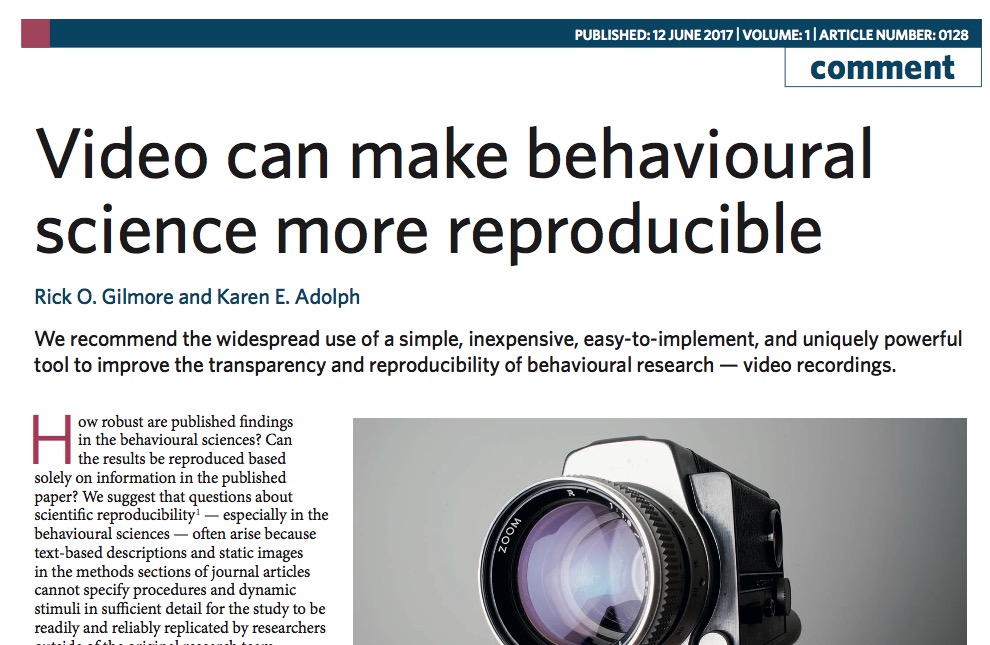

Video as data and documentation

Improved statistical practices

- Automated checking of paper statistics (in American Psychological Association formats) via Statcheck

- Redefine “statistical significance” as \(p<.005\)? (Benjamin et al., 2017)

- Or move away from NHST toward more robust and cumulative practices (Bayesian, CI/effect-size-driven)

- Better capture what we know or think we know
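The automated-checking idea in the list above can be illustrated with a toy example. The sketch below is not Statcheck (an R package that handles several test types); it is a minimal Python illustration that handles only z statistics, assumes two-sided tests, and ignores rounding of the reported test statistic. The function name and the report format it parses are assumptions made for illustration.

```python
import re
from statistics import NormalDist

# Matches reports such as "z = 2.10, p = .036" or "z = 3.30, p < .001".
REPORT = re.compile(
    r"\bz\s*=\s*(-?\d*\.?\d+)\s*,\s*p\s*([<>=])\s*(\d*\.\d+)",
    re.IGNORECASE,
)


def check_z_reports(text: str) -> list[tuple[str, str, float, bool]]:
    """Recompute two-sided p values from reported z statistics.

    Returns one tuple per report found:
    (reported z, reported p expression, recomputed p, consistent?)
    """
    results = []
    for z_str, op, p_str in REPORT.findall(text):
        p_reported = float(p_str)
        p_recomputed = 2.0 * (1.0 - NormalDist().cdf(abs(float(z_str))))
        if op == "=":
            # Allow for rounding to the number of decimals that was reported.
            decimals = len(p_str.split(".")[1])
            consistent = abs(p_recomputed - p_reported) <= 0.5 * 10 ** -decimals
        elif op == "<":
            consistent = p_recomputed < p_reported
        else:  # op == ">"
            consistent = p_recomputed > p_reported
        results.append((z_str, f"p {op} {p_str}", round(p_recomputed, 4), consistent))
    return results


if __name__ == "__main__":
    sample = "Effect A, z = 2.10, p = .036; effect B, z = 1.50, p = .04."
    for row in check_z_reports(sample):
        print(row)
```

A flagged inconsistency is only a prompt to look closer: it can reflect a typo, a one-sided test, or a rounded test statistic rather than a substantive error.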

Store data, materials, code in repositories

- Data libraries

- Funder, journal mandates for sharing increasing

- But no long-term, stable, funding sources for curation, archiving, sharing

Stuff happens

But science is still a pretty good shovel

Resources

This talk was produced on 2021-10-19 in RStudio using R Markdown. The code and materials used to generate the slides may be found at https://github.com/gilmore-lab/2021-10-19-bs-in-science/. Information about the R Session that produced the code is as follows:

    ## R version 4.1.0 (2021-05-18)
    ## Platform: x86_64-apple-darwin17.0 (64-bit)
    ## Running under: macOS Big Sur 11.6
    ##
    ## Matrix products: default
    ## LAPACK: /Library/Frameworks/R.framework/Versions/4.1/Resources/lib/libRlapack.dylib
    ##
    ## locale:
    ## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8
    ##
    ## attached base packages:
    ## [1] stats graphics grDevices utils datasets
    ## [6] methods base
    ##
    ## other attached packages:
    ## [1] DiagrammeR_1.0.6.1
    ##
    ## loaded via a namespace (and not attached):
    ## [1] Rcpp_1.0.7 knitr_1.33
    ## [3] servr_0.23 magrittr_2.0.1
    ## [5] R6_2.5.0 jpeg_0.1-9
    ## [7] rlang_0.4.11 highr_0.9
    ## [9] stringr_1.4.0 visNetwork_2.1.0
    ## [11] tools_4.1.0 websocket_1.4.1
    ## [13] xfun_0.24 png_0.1-7
    ## [15] jquerylib_0.1.4 htmltools_0.5.1.1
    ## [17] yaml_2.2.1 digest_0.6.27
    ## [19] processx_3.5.2 RColorBrewer_1.1-2
    ## [21] later_1.2.0 htmlwidgets_1.5.3
    ## [23] sass_0.4.0 promises_1.2.0.1
    ## [25] ps_1.6.0 mime_0.11
    ## [27] glue_1.4.2 evaluate_0.14
    ## [29] rmarkdown_2.9 stringi_1.7.3
    ## [31] compiler_4.1.0 bslib_0.2.5.1
    ## [33] jsonlite_1.7.2 pagedown_0.15
    ## [35] httpuv_1.6.1

References

Baker, M. (2016). 1,500 scientists lift the lid on reproducibility. Nature News, 533(7604), 452. https://doi.org/10.1038/533452a

Camerer, C. F., Dreber, A., Holzmeister, F., Ho, T.-H., Huber, J., Johannesson, M., … Wu, H. (2018). Evaluating the replicability of social science experiments in Nature and Science between 2010 and 2015. Nature Human Behaviour, 2(9), 637–644. https://doi.org/10.1038/s41562-018-0399-z

Open Science Collaboration. (2015). Estimating the reproducibility of psychological science. Science, 349(6251), aac4716. https://doi.org/10.1126/science.aac4716

Goodman, S. N., Fanelli, D., & Ioannidis, J. P. A. (2016). What does research reproducibility mean? Science Translational Medicine, 8(341), 341ps12. https://doi.org/10.1126/scitranslmed.aaf5027

LaCour, M. J., & Green, D. P. (2014). When contact changes minds: An experiment on transmission of support for gay equality. Science, 346(6215), 1366–1369. https://doi.org/10.1126/science.1256151

Mischel, W. (2011). Becoming a cumulative science. APS Observer, 22(1). Retrieved from https://www.psychologicalscience.org/observer/becoming-a-cumulative-science

Munafò, M. R., Nosek, B. A., Bishop, D. V. M., Button, K. S., Chambers, C. D., Percie du Sert, N., … Ioannidis, J. P. A. (2017). A manifesto for reproducible science. Nature Human Behaviour, 1, 0021. https://doi.org/10.1038/s41562-016-0021

Nuijten, M. B., Hartgerink, C. H. J., van Assen, M. A. L. M., Epskamp, S., & Wicherts, J. M. (2015). The prevalence of statistical reporting errors in psychology (1985–2013). Behavior Research Methods, 1–22. https://doi.org/10.3758/s13428-015-0664-2

Szucs, D., & Ioannidis, J. P. A. (2017). Empirical assessment of published effect sizes and power in the recent cognitive neuroscience and psychology literature. PLoS Biology, 15(3), e2000797. https://doi.org/10.1371/journal.pbio.2000797

Vanpaemel, W., Vermorgen, M., Deriemaecker, L., & Storms, G. (2015). Are we wasting a good crisis? The availability of psychological research data after the storm. Collabra, 1(1). https://doi.org/10.1525/collabra.13

Wagenmakers, E.-J., Wetzels, R., Borsboom, D., & van der Maas, H. L. J. (2011). Why psychologists must change the way they analyze their data: The case of psi: Comment on Bem (2011). Journal of Personality and Social Psychology, 100(3), 426–432. https://doi.org/10.1037/a0022790

Wicherts, J. M., Borsboom, D., Kats, J., & Molenaar, D. (2006). The poor availability of psychological research data for reanalysis. American Psychologist, 61(7), 726–728. https://doi.org/10.1037/0003-066X.61.7.726

![[[@baker_1500_2016]](http://doi.org/10.1038/533452a)](https://media.springernature.com/w300/springer-static/image/art%3A10.1038%2F533452a/MediaObjects/41586_2016_BF533452a_Fige_HTML.jpg?as=webp)

![[[@baker_1500_2016]](http://doi.org/10.1038/533452a)](http://www.nature.com/polopoly_fs/7.36719.1464174488!/image/reproducibility-graphic-online4.jpg_gen/derivatives/landscape_630/reproducibility-graphic-online4.jpg)

![[[@wicherts_poor_2006]](http://doi.org/10.1037/0003-066X.61.7.726)](https://raw.githubusercontent.com/gilmore-lab/psu-data-repro-bootcamp-2017-07-10/master/img/wicherts_2006_amp_61_7_726_fig1a.jpg)

![[[@lacour_when_2014]](http://doi.org/10.1126/science.1256151)](https://raw.githubusercontent.com/gilmore-lab/psu-data-repro-bootcamp-2017-07-10/master/img/lacour-green.jpg)

![[[@Nuijten2015-ul]](https://doi.org/10.3758/s13428-015-0664-2)](https://static-content.springer.com/image/art%3A10.3758%2Fs13428-015-0664-2/MediaObjects/13428_2015_664_Fig3_HTML.gif)

![[[@munafo_manifesto_2017]](http://doi.org/10.1038/s41562-016-0021)](https://media.springernature.com/full/springer-static/image/art%3A10.1038%2Fs41562-016-0021/MediaObjects/41562_2016_Article_BFs415620160021_Fig1_HTML.jpg?as=webp)

![[[@Camerer2018-tr]](https://doi.org/10.1038/s41562-018-0399-z)](https://mfr.osf.io/export?url=https://osf.io/fg4d3/?action=download%26mode=render%26direct%26public_file=True&initialWidth=711&childId=mfrIframe&parentTitle=OSF+%7C+F1+-+EffectSizes.png&parentUrl=https://osf.io/fg4d3/&format=2400x2400.jpeg)

![[[@Camerer2018-tr]](https://doi.org/10.1038/s41562-018-0399-z)](https://mfr.osf.io/export?url=https://osf.io/cefq7/?action=download%26mode=render%26direct%26public_file=True&initialWidth=680&childId=mfrIframe&parentTitle=OSF+%7C+F4+-+PeerBeliefs.png&parentUrl=https://osf.io/cefq7/&format=2400x2400.jpeg)